Custom attributes, properties and concepts

Dan Selman continues his exploration of conceptual frameworks behind data structures by showing how attributes and properties are drawn from concepts.

In my job at Docusign I provide pragmatic guidance to teams building various “smart” agreement features, which increasingly require a digital (a.k.a. computable, a.k.a. symbolic) representation of knowledge, facts, and rules; or what is called Knowledge Representation and Reasoning (KRR). In this article I introduce the high-level concepts and challenges, particularly related to “custom attributes” and machine learning.

“Every ontology is a treaty—a social agreement among people with common motive in sharing.”—Tom Gruber

One area where I have seen people struggle is in understanding the difference between a “custom attribute” or “custom property” and a concept. This has been further exacerbated recently by the use of the term “(feature) extraction” for machine learning! We shall return to that topic later…

Going back to basics, as soon as someone mentions a “custom attribute” or “custom property,” the first question to be asked is, “Attribute of what?” There are lots of ways of skinning that cat, but whatever way you slice it (sorry to mix metaphors!) you need to anchor properties against the THINGS that have the properties. The THINGS could be simple resources (for example, the digital twin of entities in the real world) or could be the abstract definitions of concepts:

[Dan] — has property height — [1.8]

[Dan] — has type — [Person]

[Employer] — domain — [Person] // the employer property applies to a Person

[Employer] — range — [Organization] // the value for the employer property must be an Organization

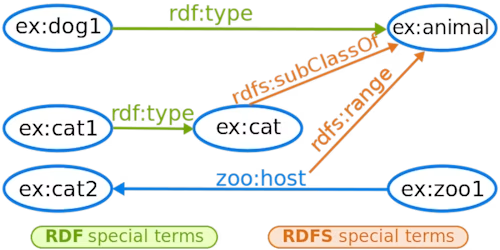

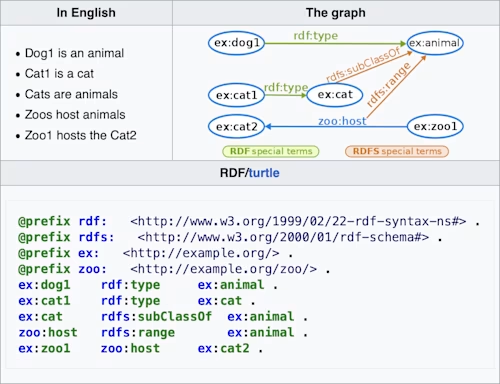

Or, here is another RDF example:

https://en.wikipedia.org/wiki/RDF_Schema
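As a rough illustration, the four statements listed earlier can be held as plain subject, predicate, object triples. This is a minimal Python sketch, not an RDF library: the predicate names `type`, `domain`, and `range` are loosely borrowed from RDF Schema, and the helper functions `objects` and `can_have` are hypothetical names introduced here.

```python
# A tiny in-memory triple store: every fact is (subject, predicate, object).
# Predicate names are illustrative and only loosely modelled on RDF Schema.
triples = {
    ("Dan", "height", 1.8),
    ("Dan", "type", "Person"),
    ("Employer", "domain", "Person"),        # Employer applies to a Person
    ("Employer", "range", "Organization"),   # its value must be an Organization
}

def objects(subject, predicate):
    """Return every object stated for the given subject and predicate."""
    return {o for (s, p, o) in triples if s == subject and p == predicate}

def can_have(resource, prop):
    """True if one of the resource's types is in the declared domain of prop."""
    return bool(objects(resource, "type") & objects(prop, "domain"))

# "Attribute of what?" becomes a query over the same triples.
print(can_have("Dan", "Employer"))
```

Note that the facts about Dan and the definition of the Employer property live in the same structure, so answering “Attribute of what?” is just another lookup.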

Note that many systems for KRR do not distinguish between what some might call DATA and METADATA. Experience shows that this distinction is typically just one of perspective or application, and it is very useful to be able to move fluidly from processing data to processing metadata, so a consistent representation for both is convenient. For example, if you are building a task management system, level 0 of your system would represent tasks, task dependencies, and people (Dan needs to buy milk before Thursday). Level 1 of your system models these: Dan is a Person, and Buy Milk is a Task with a deadline property equal to “Thursday”. Level 2+ of your system may encode constraints or key performance indicators over level 1: alert a Supervisor if a task is not completed within 24 hours of its deadline, calculate the aggregate tardiness of all tasks over the past 30 days, or escalate unacknowledged alerts to the organization supervisor.
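The task management levels can be sketched in a few lines of Python. Everything here is an assumption made for illustration: the `Task` fields, the sample dates, and the reading of “within 24 hours of its deadline” as “more than 24 hours after the deadline has passed”.

```python
from dataclasses import dataclass, fields
from datetime import datetime, timedelta
from typing import Optional

# Level 1: the model. Task and its fields are the "metadata".
@dataclass
class Task:
    name: str
    owner: str
    deadline: datetime
    completed_at: Optional[datetime] = None

# Level 0: the data. Concrete facts expressed using the level 1 model.
tasks = [
    Task("Buy Milk", "Dan", datetime(2024, 5, 2, 18, 0)),
    Task("File Report", "Dan", datetime(2024, 5, 1, 9, 0),
         completed_at=datetime(2024, 5, 1, 12, 0)),
]

# Level 2: constraints and KPIs written against the level 1 model.
def needs_alert(task, now, grace=timedelta(hours=24)):
    """True if the task is still open more than `grace` after its deadline."""
    return task.completed_at is None and now > task.deadline + grace

def aggregate_tardiness(tasks, now, window=timedelta(days=30)):
    """Total lateness of tasks whose deadline fell within the past `window`."""
    total = timedelta()
    for t in tasks:
        if now - window <= t.deadline <= now:
            finished = t.completed_at or now
            total += max(finished - t.deadline, timedelta())
    return total

now = datetime(2024, 5, 4, 18, 0)
print([t.name for t in tasks if needs_alert(t, now)])
print(aggregate_tardiness(tasks, now))

# The model itself can be processed as data, using the same language.
print([f.name for f in fields(Task)])
```

The last line is the point about perspective: the definition of `Task` is metadata to the task list, but it is ordinary data to any tool that inspects the model.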

To make property and concept declarations modular and composable, you also need to introduce the concept of a namespace (or universe of discourse). Your definition for the Customer concept may be very different to my definition! How can these two definitions co-exist? George Boole (of Boolean fame) stated this well back in 1854:

In every discourse, whether of the mind conversing with its own thoughts, or of the individual in his intercourse with others, there is an assumed or expressed limit within which the subjects of its operation are confined. The most unfettered discourse is that in which the words we use are understood in the widest possible application, and for them the limits of discourse are co-extensive with those of the universe itself. But more usually we confine ourselves to a less spacious field. Sometimes, in discoursing of men we imply (without expressing the limitation) that it is of men only under certain circumstances and conditions that we speak, as of civilized men, or of men in the vigour of life, or of men under some other condition or relation. Now, whatever may be the extent of the field within which all the objects of our discourse are found, that field may properly be termed the universe of discourse. Furthermore, this universe of discourse is in the strictest sense the ultimate subject of the discourse.

—George Boole, The Laws of Thought. 1854/2003. p. 42
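One simple way to let two Customer definitions co-exist is to key every concept by a fully qualified name. The sketch below is a hypothetical registry, not an existing library; the `com.example` namespace and the property names are invented for illustration.

```python
# Registry keyed by fully qualified name: "<namespace>.<ConceptName>".
registry = {}

def declare(namespace, name, properties):
    """Register a concept under its namespace and return its qualified name."""
    fqn = f"{namespace}.{name}"
    if fqn in registry:
        raise ValueError(f"{fqn} is already declared")
    registry[fqn] = dict(properties)
    return fqn

# Two different universes of discourse, each with its own Customer.
acme_customer = declare("org.acme", "Customer", {"accountId": "String"})
other_customer = declare("com.example", "Customer", {"loyaltyPoints": "Integer"})

print(sorted(registry))
```

The short name Customer is ambiguous on its own, while each qualified name identifies exactly one definition within its universe of discourse.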

Let’s take a concrete example:

Custom Attribute: CONTRACT_AMOUNT

Type: Monetary Amount

US English Label: “Contract Amount”

What does this attribute definition tell us? I’d argue that, absent any other information, it is telling us very little. In particular, what was the intention behind this attribute and how is it semantically grounded? For example, should I use this attribute on a real estate contract? Should it represent the monthly or yearly rent, or the total expected rental income? How should I use this attribute on an IP contract, or on a purchase order, or an employment contract? I hope you can see that once context (universe of discourse) and owning concept have been stripped away, we are left in a muddle, having to rely on external documentation or guidance for how and when to use the attribute. A more contrived example would be the “Number of Legs” attribute, which could be applied to People, Animals, Furniture or Sports. Does it really make sense for these entities to share this property? To what end? How do we prevent ourselves from comparing 🍏 and 🍊 by using the same symbols, but with completely different meanings?

The risk is that, once divorced from semantic meaning, it becomes trivial to aggregate or compare things purely syntactically—for example, to create a time series that shows the aggregate CONTRACT_AMOUNT for all contracts. This will be misleading at best!

The solution in this instance is to introduce a concept of a Real-Estate Contract that has a property Yearly Rental Income. The Real-Estate Contract concept is defined in the org.acme namespace to make explicit that this is Acme’s definition of a real-estate contract (and there may be other definitions). It becomes easy to compare or aggregate Acme Real-Estate Contracts (or their specializations), but hard to compare an Acme Real-Estate Contract with an Acme Employment Contract.
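A minimal sketch of that idea, assuming Python classes stand in for concepts in the org.acme namespace: the class and field names are illustrative, and a single currency is assumed so that amounts can be summed directly.

```python
from dataclasses import dataclass
from decimal import Decimal

NAMESPACE = "org.acme"  # both concepts below are Acme's own definitions

@dataclass(frozen=True)
class RealEstateContract:
    yearly_rental_income: Decimal

@dataclass(frozen=True)
class EmploymentContract:
    yearly_salary: Decimal

def total_yearly_rental_income(contracts):
    """Sum rental income, refusing anything that is not a RealEstateContract."""
    contracts = list(contracts)
    for c in contracts:
        if not isinstance(c, RealEstateContract):
            raise TypeError(f"cannot aggregate {type(c).__name__} as rental income")
    return sum((c.yearly_rental_income for c in contracts), Decimal("0"))

leases = [RealEstateContract(Decimal("12000")), RealEstateContract(Decimal("24000"))]
print(total_yearly_rental_income(leases))
```

Aggregating leases is a one-liner, whereas slipping an `EmploymentContract` into the list raises an error instead of silently producing a meaningless total.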

In the introduction I mentioned “extractions”, so let’s return to that: machine learning (ML) engineers and data scientists train ML models to extract features from data: perhaps extracting the CONTRACT_AMOUNT from a PDF document. In essence, the labelled training set used to create the ML model defines the universe of discourse and the labels supply the semantic meaning of the feature. Did the training set contain documents that represent 🍏 and 🍊? Were the humans (or machines…) consistent in how they labelled the attribute? Does it make sense to apply the trained ML model to different universes of discourse? Does the system warn me if I attempt to use an ML model outside of its universe of discourse?
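One possible answer to the last question is to record, alongside each model, the concept and property its training labels describe, and to warn when it is applied elsewhere. This is only a sketch: `BoundExtractor` is a hypothetical wrapper, and the lambda is a stand-in for a trained model, not a real ML API.

```python
import warnings
from dataclasses import dataclass
from typing import Callable

@dataclass(frozen=True)
class BoundExtractor:
    concept: str                    # concept the training labels describe
    prop: str                       # property of that concept being extracted
    extract: Callable[[str], str]   # stand-in for a trained ML model

    def apply(self, document_text, document_concept):
        """Run the model only inside the universe of discourse it was trained on."""
        if document_concept != self.concept:
            warnings.warn(
                f"extractor for {self.concept}.{self.prop} "
                f"applied to a {document_concept} document"
            )
            return None
        return self.extract(document_text)

# Toy "model": returns the last whitespace-separated token of the text.
rent_extractor = BoundExtractor(
    concept="org.acme.RealEstateContract",
    prop="yearlyRentalIncome",
    extract=lambda text: text.rsplit(" ", 1)[-1],
)

print(rent_extractor.apply("Yearly rent: 36000", "org.acme.RealEstateContract"))
print(rent_extractor.apply("Annual salary: 90000", "org.acme.EmploymentContract"))
```

Whether a mismatch should warn, return nothing, or fail outright is a design choice; the sketch warns and declines to produce a value.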

Our whistle-stop tour of some of the issues complete, let’s bring this back to pragmatic engineering requirements.

The primary focus for our knowledge representation must be to ensure that it is easily understood by stakeholders: end-users using the knowledge, or application developers building knowledge-centric applications. For better or worse, this places us in either the object-oriented or the tabular/relational model, as these are the only knowledge representations taught to most undergraduates, programmers and business people. In the relational model, we define the structure of tables and the relationships between tables and then create rows within those tables to store instances. In the OO model, we define the structure of classes in terms of properties, and relationships between classes, and then create instances of classes which can be stored in-memory, on disk, or serialised to object or document databases.

We need a computable representation of the structure of data, first and foremost, with a simple “instance of” property between resources and the classes: [Dan] is an instance of [Person].

We need to be able to attach properties to classes: [Person] has a numeric property height.

We need meta-properties on properties, to express things like validation constraints: [Person] has a numeric property height which must be greater than zero.

We need to capture unary and N-ary relationships between classes: [Person] is related to zero or more [Person] entities via the [children] property.

It is useful to be able to decorate classes, namespaces and properties with arbitrary meta-attributes, as an escape hatch extensibility mechanism.

Abstract classes and super-classes are useful to promote reuse and specialisation: [Animal] is an abstract concept. A [Dog] is an [Animal].

Namespaces are used to define discrete universes of discourse, and prevent naming collisions.

Imports of types from external namespaces promote modularity and reuse.

Our ML models should be bound to properties of concepts, so we don’t inadvertently use a model trained on 🍏 to produce 🍊.
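Several of these requirements fit in a very small meta-model. The sketch below is an illustration under stated assumptions, not a real modelling library: `Property`, `Concept`, and `validate` are hypothetical names, the type names are plain strings, and imports between namespaces are left out for brevity.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

@dataclass
class Property:
    name: str
    type_name: str                      # primitive or fully qualified concept
    is_array: bool = False              # True for "zero or more" relationships
    validator: Optional[Callable[[object], bool]] = None  # meta-property
    decorators: dict = field(default_factory=dict)        # escape hatch

@dataclass
class Concept:
    namespace: str
    name: str
    properties: list = field(default_factory=list)
    is_abstract: bool = False
    super_type: Optional["Concept"] = None
    decorators: dict = field(default_factory=dict)

    @property
    def fqn(self):
        return f"{self.namespace}.{self.name}"

    def all_properties(self):
        """Own properties plus those inherited from super-types."""
        inherited = self.super_type.all_properties() if self.super_type else []
        return inherited + self.properties

def validate(concept, data):
    """Return a list of problems found when treating `data` as an instance."""
    if concept.is_abstract:
        return [f"{concept.fqn} is abstract and cannot be instantiated"]
    problems = []
    for p in concept.all_properties():
        if p.name in data and p.validator and not p.validator(data[p.name]):
            problems.append(f"{p.name} failed validation")
    return problems

animal = Concept("org.acme", "Animal", is_abstract=True,
                 properties=[Property("name", "String")])
dog = Concept("org.acme", "Dog", super_type=animal)
person = Concept("org.acme", "Person", properties=[
    Property("height", "Double", validator=lambda v: v > 0),
    Property("children", "org.acme.Person", is_array=True),
])

print(validate(person, {"height": 1.8}))
print(validate(person, {"height": -1}))
print([p.name for p in dog.all_properties()])
```

Because the model is itself ordinary data, the same structures can be serialised, queried, or used to bind an ML model to a specific property of a specific concept.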

Dan Selman has over 25 years of experience in IT, creating software products for BEA Systems, ILOG, IBM, Docusign and more. He was a Co-founder and CTO of Clause, Inc., acquired by Docusign in June 2021.
